Turn AI pilots into production systems that compound value

We partner with CEOs and CXOs to ship production-grade AI systems—aligned with strategy, running in your cloud, evolving with the technology.

How we help

Strategy

Clarify where AI creates advantage—what to build, when, and how it shows up in your P&L.

Agents

Capture how your best teams work—their decisions, workflows, and expertise—and encode it into AI agents that get things done.

Production

Take your AI proof-of-concepts to production-ready systems, then transfer ownership to your team with hands-on AI upskilling.

Platform

Build secure, production-grade infrastructure with monitoring, governance, and room to scale.

Strategy

Clarify where AI creates advantage—what to build, when, and how it shows up in your P&L.

Fixed-scope projects

Tightly scoped engagements ($25,000–$50,000, 3–6 weeks) designed to unlock one critical capability you can build on.

Portfolio & Operating Model Sprint

Align leadership on where AI creates advantage

Strategic assessment that aligns AI investment with business value and organizational readiness.

$25,000–$40,000

3–4 weeks

We work with your CXO team to map current AI initiatives, identify high-leverage opportunities, and design an AI operating model that fits your strategy, risk profile, and technology landscape.

The engagement includes stakeholder interviews, portfolio assessment, capability mapping, and roadmap development. Deliverables include a prioritized portfolio of use cases, a 12-month implementation roadmap, and an operating model framework with clear roles, governance, and success metrics.

Best for organizations investing $1M+ in AI that need strategic clarity before scaling initiatives.

Long-term Projects

A progression of engagement models—from discovery through to running a fleet in your cloud.

"Pathfinder"

POC that scouts value fast

Starting at $24,000

2–4 weeks

"Pathfinder" engagements help organizations move past uncertainty by creating product-focused prototypes that demonstrate what AI-powered offerings could deliver. These are not technical experiments—they're strategic tools designed to build stakeholder confidence and secure investment for next-stage development.

We work with your leadership team to identify high-potential use cases, architect scalable technical approaches, and build working prototypes that showcase meaningful business impact. The deliverable is a functioning demonstration that excites internal stakeholders while providing clear guidance on implementation requirements, timeline, and expected returns.

Best for organizations beginning their AI journey, or those seeking to validate specific use cases before committing to full development.

Selected Work

AI infrastructure, MLOps platforms, and production systems built for startups and enterprises.

Knowledge workers needed a way to automate complex, multi-step document analysis and content generation workflows that required more than simple chat interactions. Existing AI tools lacked the ability to capture repeatable business logic, connect to external data sources, and execute sophisticated workflows reliably at scale with enterprise-grade security.

Founded AI Hero (Delaware C Corp) and built a notebook-style workflow automation platform enabling users to create, customize, and execute AI-powered workflows. Architected a fully scalable Kubernetes infrastructure where each request spawned isolated pods for secure, parallel processing. Implemented comprehensive authentication, workflow orchestration, and chat capabilities with a focus on enterprise security requirements.

Achieved SOC 2 Type 2 compliance, providing enterprise customers with the security assurance needed for AI adoption. The scalable pod-based architecture enabled reliable execution of complex workflows involving PDF analysis, web scraping, and multi-step reasoning—transforming AI Hero into an enterprise-ready workflow automation platform.

Note: I'm a founder of A.I. Hero, Inc.

Recent Writings

Insights on AI strategy, implementation, and the evolving landscape of enterprise AI.

Claude Code Plan Mode: Think Before You Code

Plan mode transforms how developers work with AI coding assistants by enabling structured planning before implementation. Instead of diving straight into code, you collaborate on architecture, explore trade-offs, and align on approach first.

Dec 17, 2025

Popular MCP Servers Developers Actually Use in 2025

Analysis of 1,000+ Reddit developer comments reveals the MCP servers that matter and how developers integrate them into VS Code, Cursor, and JetBrains workflows. From the essential trinity to multi-model review loops, a practical guide to MCP in real developer environments.

Dec 2, 2025

MCP v2025-11-25: What's Actually New

A practical look at the November 2025 MCP specification update: OAuth improvements, tool calling in sampling, structured elicitation, and the experimental tasks utility.

Dec 2, 2025

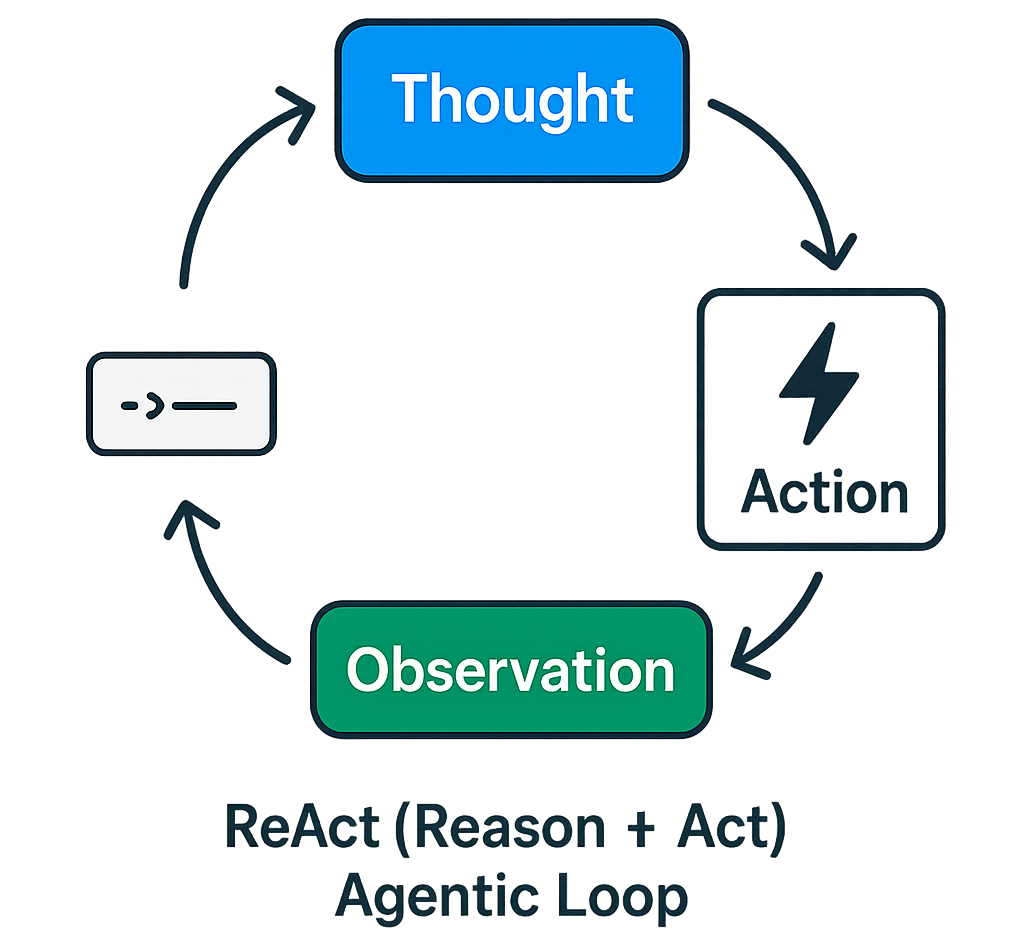

The Agentic Loop: How AI Agents Actually Think

Understanding the core architecture pattern behind modern AI agents—from basic reasoning loops to production implementations in Claude and GPT.

Oct 29, 2025

Let's Talk

Ready to turn AI pilots into production systems? Let's discuss how we can help.